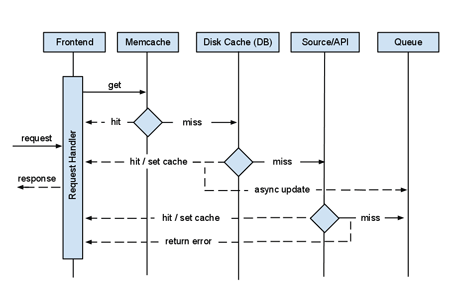

Stale cache serving strategy with reactive flush

This is a cache I created that always lets me serve the user cached data, i.e. the user never sees a cache miss. Sometimes the data is simply too expensive to compute while the user's request thread waits; other times you just want high availability with no user-facing recompute time at all. This is a great technique for template caches and API wrappers, and I have used it more than once in my career.

I achieve the goal of no cache misses by first building what I call a persistent cache: memcache backed by disk or a database. If memcache ever misses, I look in the database and pull the result from there. I then recache that result in memcache for a short time and return the response to the user. At the same time, I put a task on my task queue (MQ) to recalculate the data and update the persistent cache. If both caches are empty, I hit the API and, on success, cache the results and return the data to the user. If the API throws an error, I put a task on the queue, and hopefully a successful API query will have warmed the cache by the next time the user requests that data.
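
The read path above can be sketched in Python roughly as follows. This is a minimal illustration, not the original Klout Widget code. Plain dicts stand in for memcache and the disk/database tier, and a `deque` stands in for the task queue. The names `StaleServingCache`, `UpstreamError`, `fetch` and `run_pending` are hypothetical. The clock is injectable so the TTL behavior can be shown without waiting.

```python
import time
from collections import deque

_MISS = object()  # sentinel so a cached None is not mistaken for a miss


class UpstreamError(Exception):
    """Hypothetical error raised when the upstream API call fails."""


class StaleServingCache:
    """Two-tier read path: memcache in front of a persistent store.

    The dicts and deque below are stand-ins (assumptions) for memcache,
    the disk/database tier, and the task queue (MQ).
    """

    def __init__(self, fetch, memcache_ttl=60.0, clock=time.monotonic):
        self.fetch = fetch                # callable(key) -> value; may raise UpstreamError
        self.memcache_ttl = memcache_ttl  # short TTL for the in-memory tier
        self.clock = clock
        self.memcache = {}                # key -> (value, expires_at)
        self.persistent = {}              # key -> last known good value
        self.queue = deque()              # keys waiting to be recomputed

    def _memcache_get(self, key):
        entry = self.memcache.get(key)
        if entry is None:
            return _MISS
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self.memcache[key]        # expired: behave like a memcache miss
            return _MISS
        return value

    def _memcache_set(self, key, value):
        self.memcache[key] = (value, self.clock() + self.memcache_ttl)

    def get(self, key):
        value = self._memcache_get(key)
        if value is not _MISS:
            return value                  # hot path
        if key in self.persistent:
            value = self.persistent[key]  # possibly stale, but never a miss
            self._memcache_set(key, value)
            self.queue.append(key)        # recompute in the background
            return value
        try:                              # both tiers empty: call the API inline
            value = self.fetch(key)
        except UpstreamError:
            self.queue.append(key)        # retry later so the cache gets warmed
            return None
        self.persistent[key] = value
        self._memcache_set(key, value)
        return value

    def run_pending(self):
        """Worker side: drain the queue and update the persistent tier."""
        while self.queue:
            key = self.queue.popleft()
            try:
                self.persistent[key] = self.fetch(key)
            except UpstreamError:
                pass                      # keep serving the last good copy


now = [0.0]
calls = []

def fetch(key):
    calls.append(key)
    return f"{key}-v{len(calls)}"

cache = StaleServingCache(fetch, memcache_ttl=30.0, clock=lambda: now[0])
print(cache.get("bjorn"))  # bjorn-v1  (fetched inline, both tiers filled)
now[0] = 31.0
print(cache.get("bjorn"))  # bjorn-v1  (memcache expired, stale copy served)
cache.run_pending()        # persistent tier now holds bjorn-v2
now[0] = 62.0
print(cache.get("bjorn"))  # bjorn-v2
```

Following the description, the worker here only updates the persistent tier. The stale memcache copy simply expires after its short TTL, and the next miss picks up the refreshed value while queuing another refresh. That is what makes the flush demand-driven rather than scheduled.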

Essentially this creates a kind of MRU cache, with memcache holding only frequently used data. I could use cron or something like it, but I like that my users and bots are the ones who flush my caches, based on demand rather than on time or some other indicator.

The diagram above shows how I built the caching for Klout Widget, a hack I created just to demonstrate this strategy. Their API was a little finicky, so I decided to create a persistent cache that would be resilient to bad or missing data. Go ahead, give it a try.
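
One way to get that resilience to bad or missing data is to validate each refreshed response before it can overwrite the stored copy. That way a failed or malformed call never replaces the last good value. The sketch below assumes a hypothetical response shape: a dict with a numeric `score` between 0 and 100. `is_valid_score`, `refresh_one` and the `max_attempts` limit are illustrative names and parameters, not Klout's API or the widget's actual code.

```python
from collections import deque


class UpstreamError(Exception):
    """Hypothetical error for a failed upstream API call."""


def is_valid_score(payload):
    """Hypothetical check: a dict whose 'score' is a number from 0 to 100."""
    if not isinstance(payload, dict):
        return False
    score = payload.get("score")
    if isinstance(score, bool) or not isinstance(score, (int, float)):
        return False
    return 0 <= score <= 100


def refresh_one(queue, persistent, fetch, max_attempts=3):
    """Process one (key, attempt) task; only valid data overwrites the stored copy."""
    key, attempt = queue.popleft()
    try:
        payload = fetch(key)
    except UpstreamError:
        payload = None                    # treat a failed call like missing data
    if is_valid_score(payload):
        persistent[key] = payload         # good data replaces the last good copy
        return True
    if attempt + 1 < max_attempts:
        queue.append((key, attempt + 1))  # retry later
    return False                          # the previous copy (if any) is kept


persistent = {"bjorn": {"score": 42}}
queue = deque([("bjorn", 0)])
responses = iter([{"score": None}, {"error": "timeout"}, {"score": 45}])

def fetch(key):
    return next(responses)

while queue:
    refresh_one(queue, persistent, fetch)
print(persistent["bjorn"])  # {'score': 45}
```

Capping retries keeps a permanently broken key from cycling through the queue forever. Meanwhile, the last good copy keeps being served.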

Anyway, I hope this strategy is useful. If anyone knows the name for this type of cache, I’m all ears. As far as I know, it’s something I dreamed up.

This is great, particularly for anyone working with a finicky API – Klout’s has been the worst in my recent memory. I wrote a script that generates a Klout score badge from the average score of the users on a particular Twitter list, which is good for sharing the average Klout of your team or organization. I found caching Klout score data essential, as the scores only seem to update daily and ~25% of my API requests failed for no apparent reason. The other half of that script used the Twitter API, which performed flawlessly.

Shouldn’t web services like Klout handle the load management and QoS themselves? I understand this is a great proof of concept on your part, but I’d like to see the companies that profit most from these services implement this to shore up their wobbly APIs.

Great work!

Hi John,

Your Klout widget isn’t working for me (Twitter handle @bjorn). It’s just displaying a question mark where the number should be.

Any idea why not?

Thanks,

Bjorn

That is odd. Honestly, I built this thing so long ago that I don’t maintain it anymore. IIRC, it should time out and start working on its own, so it should work tomorrow.

If not, I’ll do my best to find some time to open source the software so others can work on it and fix it.