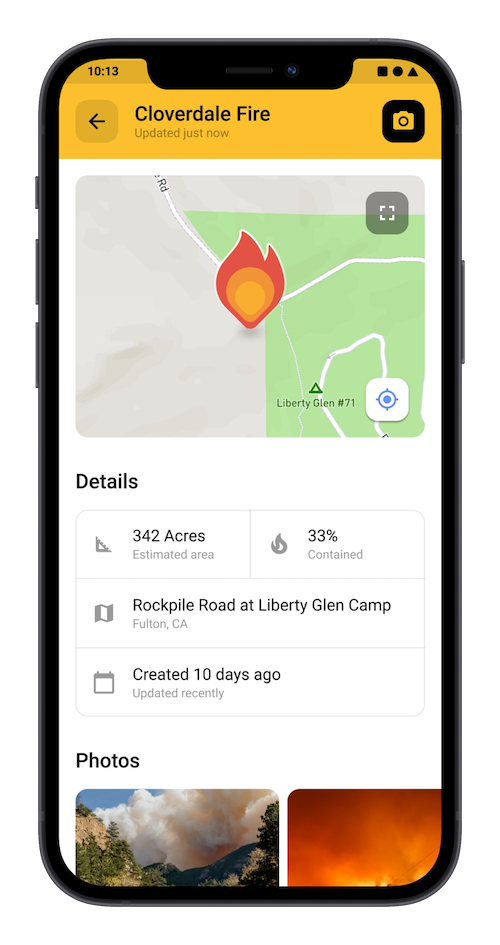

The past ninety days have been some of the most exciting and challenging of my career. I am deeply moved by the generosity of my community, both in Silicon Valley and the Russian River Valley, that came together to create the first citizen driven disaster intelligence platform.

With almost no capital and only donations of human capital and services from companies like Zenput, Amazon Web Services, Heroku (a Salesforce Company), and Sixième Son, we went from a team with an idea to deployment to growth in one quarter.

As many of you know, I left my company a little less than one year ago to build my first non-profit, the Sherwood Forestry Service. It was founded with the belief that given the right environment and leadership with no money changing hands we can take idle human potential and make it dangerously kinetic.

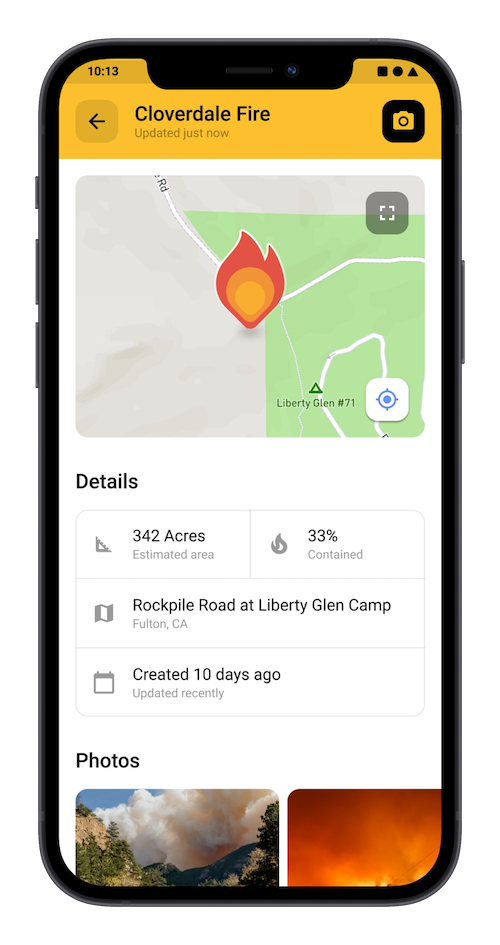

Today, I am happy to announce that we have done just that with the public release of Watch Duty. Our application is piloted by skilled radio operators, firefighters, and citizen information officers who will provide up-to-the-minute information pushed directly to your phone. Citizens will also be able upload geotagged photos of what they’re seeing on the ground to help first responders and other citizens find danger. Our goal is to help discover and track wildfire hazards in real time without having to look for it. What we are creating will be the most actionable and accurate system of record for disaster intelligence built to date.

Today marks the last day of my eight year journey as CTO of Zenput. For these past eight years I have dedicated my life to this company, our customers, the friends who’ve joined, and the friendships forged along the way. From the humble beginnings around my dining room table with just Vladik and myself trying to convince our friends to join (all of you are still here!) to a multinational corporation doing eight figures in revenue, this has truly been one of the most exciting and challenging projects of my lifetime.

As many of you have heard me say over the years, my goal has always been to make myself replaceable. That’s a statement that I made at the founding of this company and one that I stand behind today. It has taken the better part of a decade to get here but now that I am completely confident in the company’s future success that I can safely say my mission has been accomplished.

I would like to thank all of you Zenputers for making this possible. Without your capable hands and leadership I would never be able to step away from operating. If there’s one thing I’d like you to take away from my departure it’s that you are great at what you do. It’s that greatness and sense of agency that you all have that has allowed me to move on to pursue other personal endeavors. Thank you.

As for me, I will remain on the board and be available to help whenever and wherever needed but for the time being I am going to retire in the foothills of Sonoma. Maybe one day I’ll build another team half as good as this one but until then you’ll find me working with my hands on the ranch.

As for all of you, you’ll continue to build, market, and sell the most robust execution operating system for public health and safety in the world with empathy, curiosity, and systems thinking [our company values]. I’ll be cheering from the sidelines and helping from a board level wherever necessary. Although my time as an operator here is over, my commitment to achieving a positive outcome for our customers and ourselves has never been stronger.

I wish you all the best of luck and enjoy the rest of the journey!

Over a decade in the making, I finally took the plunge and bought a school bus to convert it into an Orient Express style train car for the highway. It’s been aptly named the Disorient Express.

This is something I’ve been thinking about ever since moving to California in 2004 and riding in friends buses and art cars at Burning Man. In particular I’ve always had my eye on Crown Coach school buses. Besides their beautiful 1950′s styling, they were well built with standard big rig parts making it easy to maintain and the skin is aluminum so it won’t rust. I almost bought one back in 2007 but the buyer backed out at the last minute so I abandoned the idea knowing one day I’d get back to it.

That day came in November of 2017 when I was browsing eBay and Craigslist as I like to do from time to time. This beautiful 1988 Crown from Merced School District came up for sale with only 84k miles on it. I knew right then and there that this was what I had been waiting for.

A few days later the project was in full swing. I had friends coming by to help to remove all the seats and get the bus ready for its new life; a dining car and lounge suitable for entertaining as well as a sleeping quarters, bathroom, and kitchen. This project has been a huge undertaking including extensive woodworking, plumbing, and electrical. None of this would be possible without the help of many willing and able friends who are just crazy enough to follow along for the journey.

Here are few photos of what the bus looks like now but if you’d like to follow along with the progress checkout the Disorient Express on Instagram.

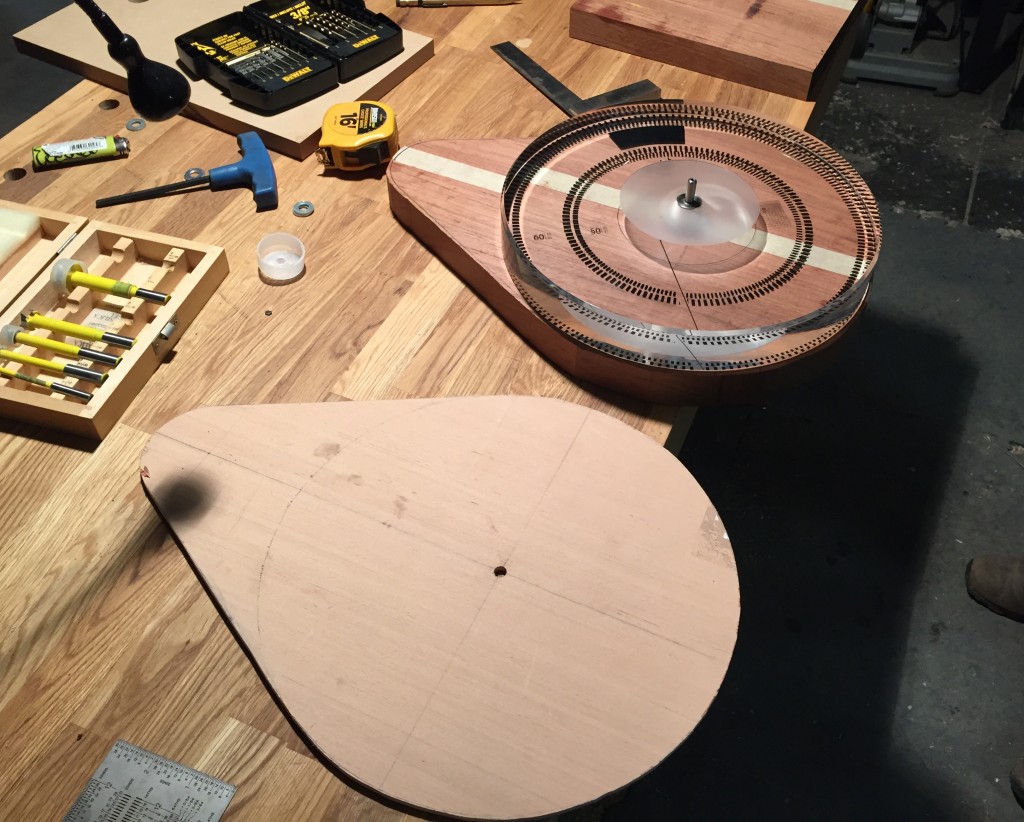

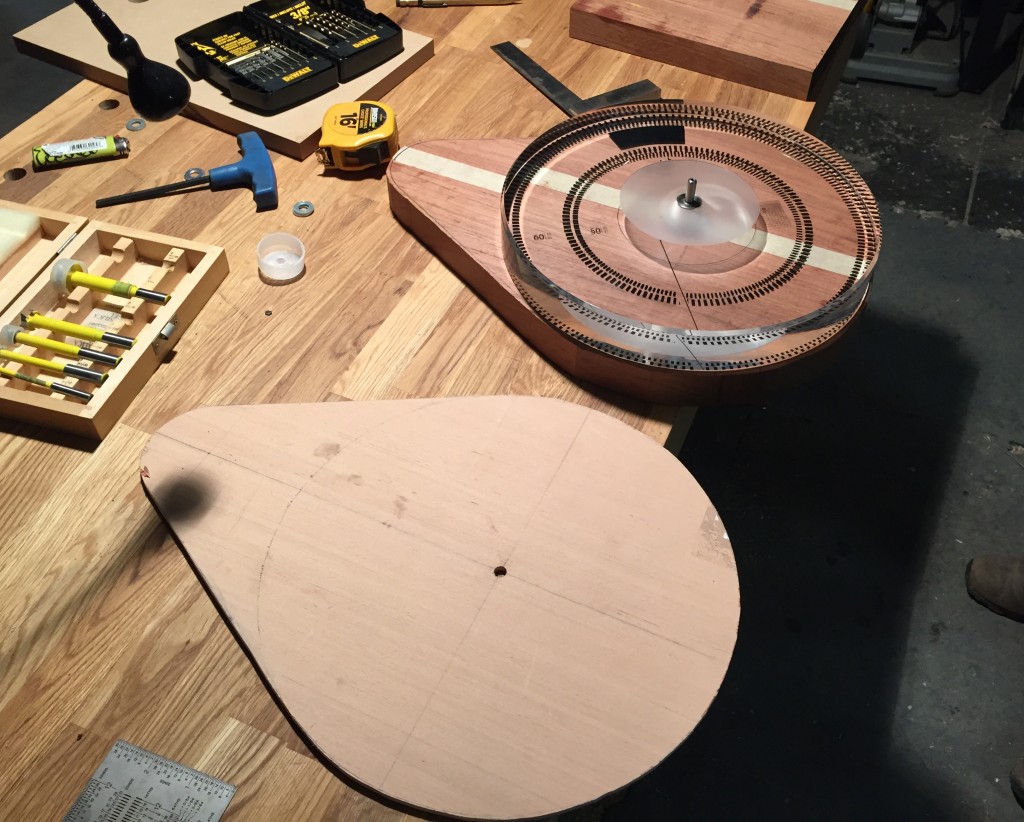

Nearly eight years ago I began building a record player. Many projects have gotten in the way between then and now but I’m happy to say the project is finally complete thanks to the help of my friend Brian.

As you can see from the sketch above, the goal was to create a teardrop design. Something simple and elegant. This was the only part we actually had to fabricate ourselves.

We used Jatoba wood and machined it with a band saw and a router. First we made a template out of 1/4″ plywood as our guide. This also was our template for using the router to get a perfect fit.

The motor, platter, and bearing were all sourced from DIY HiFi Supply. The tonearm is a Rega 202 that I bought from eBay. Its a decent starter tonearm and not too expensive.

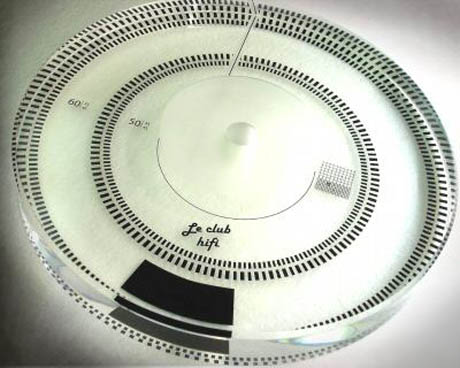

This platter was laser cut out of 40mm thick acrylic at a final diameter of 298mm. Its truly is a beautiful piece of work. The markings on the platter are for timing it with a strobe. Below is a photo of the bearing that I ordered along with it. It too is a very nicely machined piece of work with a self-oiling design using rifles to push the oil back up to the bearing. When everything is all connected together it turns very smoothly.

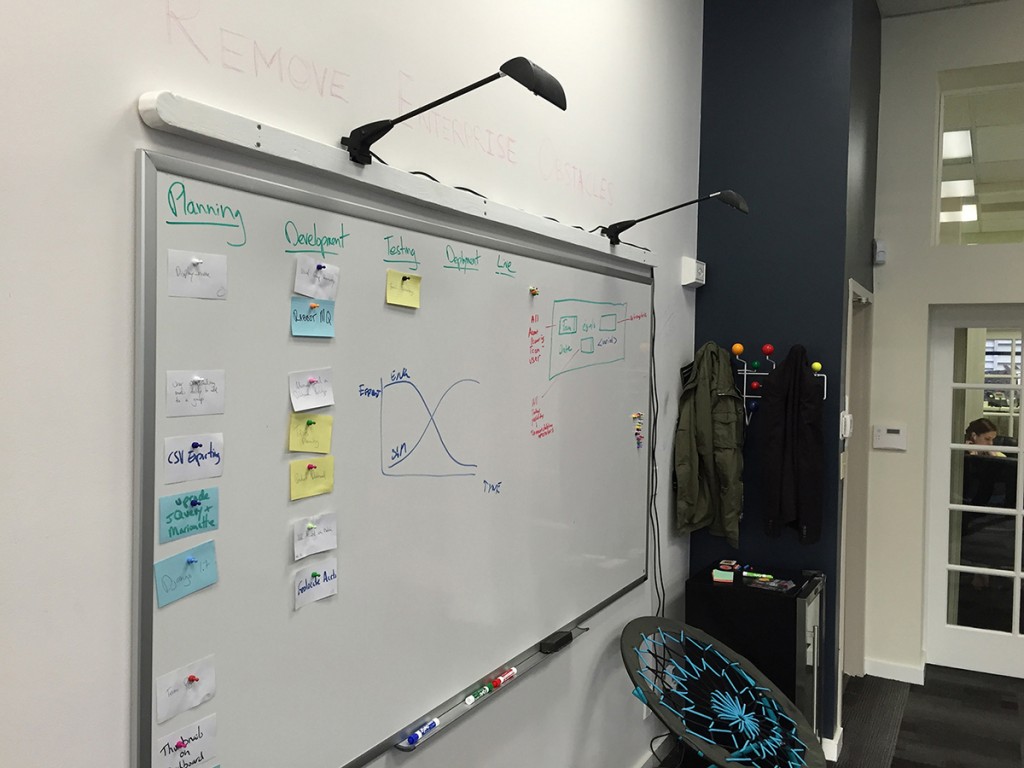

San Francisco is going through a major housing and commercial real estate crisis with no sign of decline. People are going through great lengths to stay here using creative solutions like communal housing to live and sleep and coworking spaces to work. At Zenput, the company I cofounded, we too started out in a coworking space. This was a great solution for us to get our company off the ground but as we grew in numbers we also had to grow our space.

When we began to look for office space in May of 2014, the average cost per square foot was nearly $60 a year. This is a crippling number considering we are an early stage company so we set out to find creative solutions. A few things we considered were:

1. Staying in our current coworking space

2. Sharing space with another startup

3. Moving out of the city

4. Moving to a less desirable neighborhood

In the end, we decided we couldn’t leave the city that we loved and we also knew that having 10 people in a coworking space just wasn’t practical. Also, sharing a space with another startup was almost the same as a coworking space. Ultimately we decided to find our own private space to help our team grow.

After looking at dozens of beautiful office spaces with high ceilings, lots of light, and debilitating price tags, we began to look on the outskirts of more desirable neighborhoods. Oddly enough, we found a beautiful 2400 square foot space right down the street from Twitter and Uber for less than $40 a square foot.

At first glance the space was quite uninspiring with stark white walls, 12 foot tall drop ceilings, and horrendous fluorescent lighting. All of these flaws combined with the location is most likely why it was priced so cheaply. All it needed from us was a little creativity to make this place somewhere that we looked forward to seeing in the morning.

In keeping with our lower budget theme, we employed the following techniques to personalize the space:

1. Paint an accent wall or two with our company colors

2. Buy floor/wall lamps to avoid using the fluorescent ceiling lights

3. Create a white board/chalk board wall

4. Add texture to the walls (we found clapboard siding at a salvage yard)

5. Bought chairs from failed startups on craigslist

6. Bought cheap trestle style desks from IKEA

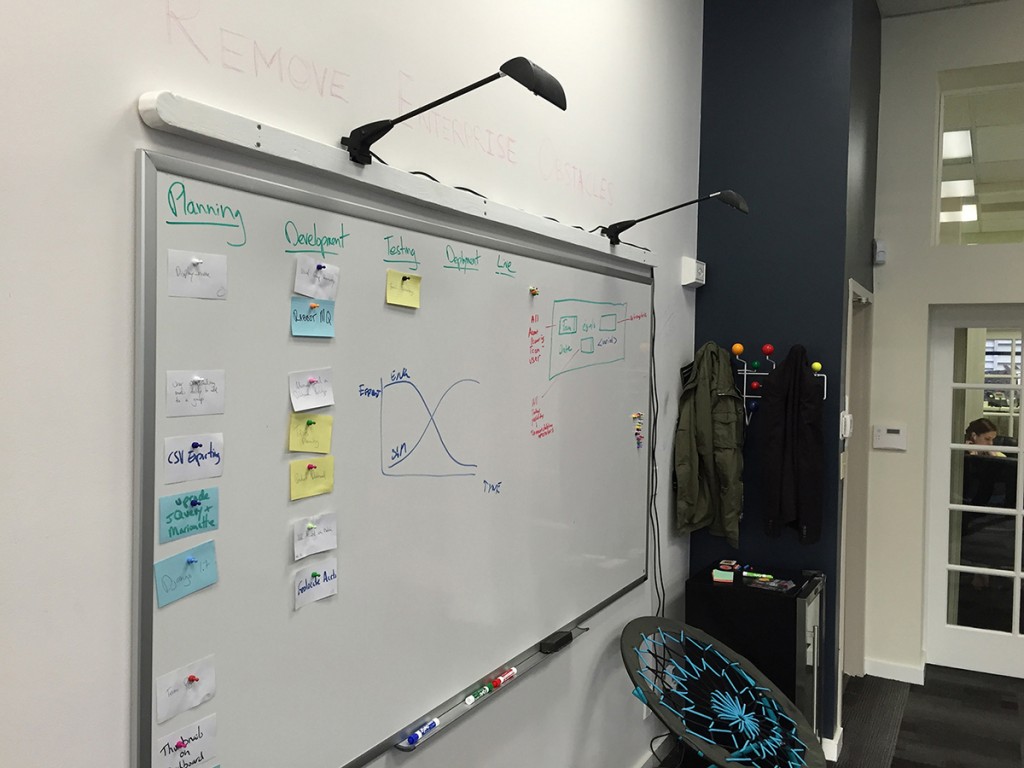

Below are some of the work in progress photos

(recycled siding we found at a salvage yard)

(cheap whiteboard and lights we recycled from a trade show booth)

(vinyl sign we had made from our logo)

All in all, this isn’t hard to accomplish on a budget. We just had to be creative and do the labor ourselves. It was a fun team bonding exercise and a great learning experience for all who helped. To see more photos of the build, checkout the (Flikr) Album.

This article was originally published in Forbes.

As a technical co-founder, the second most-asked question I receive (behind “where do I find a technical co-founderâ€) is from engineers asking me, “What language/framework/ new-hip-thing should I learn?â€

This question has always puzzled me, especially when it comes from seasoned veterans who have well-respected jobs. Although my alma mater taught me this way as well, this is not the way I think — and neither should veteran engineers. Why would you want to learn yet another language, when that language is nothing more than a set of instructions that tell a machine how to behave? Any engineer worth his salt can pick up a new language in a matter of weeks. The same goes for a new framework. If you’ve been writing software for 10 years and you’re worried about the new hot framework out in the world, you’ve lost the forest for the trees.

The bigger question is, how do you take your knowledge of language and software systems to hack real-world systems? I look at this as a form of social engineering; instead of the goal being to teach a machine how to behave, you are attempting to create a machine that mimics the way humans behave. Or better yet, teach the machine to behave the way the user expects it to behave.

This is the difference between a good product and a great one, and it has nothing to do with software. Companies like Twitter had to change the way humans behaved. It was a long and arduous road, and people still don’t ‘get Twitter,’ whereas companies like Apple and Facebook have painstakingly studied human beings and purposely built products around their needs.

I can’t tell you the amount of times that I’ve come into an engineering meeting where one engineer is bragging to another that in five lines of beautifully succinct code they have been able to complete their task in record time with flawless execution. But I can count on one hand the number of times an engineer has bragged that they changed five lines of HTML and upped the engagement rate 10% on a feature that everyone perceived as useless. A hack is a hack, and that’s what engineers do. I don’t care if it took zero lines of code or a hundred and a roll of duct tape to complete the task, and guess what? Neither do your customers. The product is all that matters.

Both Facebook and Apple have taken this to extremes by hacking human perception. Facebook recently discovered that by changing their loading animation icon to what iOS and Android natively do, the user couldn’t tell if the slowness was coming from their phone, their carrier, or Facebook itself. This simple, genius hack saved so many man hours and millions of dollars of server time it’s hard to even quantify the impact. Apple has done something similar by creating a stripped progress bar that scrolls at just the right speed so you feel as though it is moving faster than it actually is.

These hackers were so perceptive and audacious that rather than engineer a faster application, they decided it was easier to change the users’ perception of time. All of this by changing very little code and no infrastructure.

But let’s get back to the engineer’s original question. I use my current team of three engineers to prove my point. When I began hiring back in May of 2013, not one of them knew our native language of Python, and the other two had barely written any JavaScript (which we have a lot of). Less than a year later, I now have three full-stack engineers who are capable of writing complex interactions on a JavaScript-heavy front-end stack all the way to organizing complex schemaless datasets. If I had attempted to hire three full-stack engineers out the gate, I would be lucky to end with one. Instead, I found three humans who are willing and able to think about the bigger system and realize that often, the best code is the code not written.

Next time you’re hiring an engineer, don’t just ask them the usual whiteboard questions and what they learned in college 10 years ago. Your job is to find out if they are willing and able to program the way users behave — or just program computers.

Last May my friend Kyle Stewart, Executive Director of ReAllocate, came to me last May and asked, “what would you do with a 14,000 square foot warehouse in the heart of San Francisco?†Assuming this was a loaded question I first asked him how much it would cost. He assured me that it wasn’t a concern of mine and told me to grab my tools because we had work to do.

It turns out that our friend Mike Zuckerman had secured a warehouse at 1131 Mission Street for just $1 for the month of June to conduct a civic experiment. A ‘culture hack’ as he refers to it; repurposing otherwise unsused or private properties for different uses then they were intended. For me it was about creating a modern day community center run by hackers, completely based on merit, not money. If you could swing a hammer, paint a portrait, program a computer, or organize a coup you were given a canvas in which to experiment.

Much like how children rally together to build a treehouse with no reservations, we did the same. This all happened so fast and there was no time to fuss over governance, planning, and structure. Traditonal democractic process was thrown out the window and its place we were left with an adhocracy. Where immediacy, merit, and vision collide.

And so, starting in June the great experiment began. For the entire month the place was hopping with excitement from ten in the morning to ten at night. There were free yoga classes, free drawing classes, and free dance classes. There were artists painting murals on every flat surface you could find and hackers soldering away at LED light projects. Artists were working with entrepreneurs and the homeless were interacting with computer programmers. Everywhere you looked you would find people mixing ideas and cultures.

After only a few weeks of being open, we realized that one month just wasn’t going to cut it. In true Freespace fashion, we gathered a headless team of volunteers together to start a crowd funding campaign and media blitz to raise $24k to pay the full rent the following month. Sure enough, we had enough press coverage and touched enough people that we were able to raise the money and continue our experiment.

Fast forward 6 months later to today, Freespace in its original incarnation is gone but the idea is spreading. One just opened up in the heart of Paris and the city of San Francisco gave us a grant to do another one on Market Street. In only 6 months the conversation has changed from us begging and pleading for space, to now where building owners are contacting us to activate their vacant spaces. Requests are coming in across the globe as the idea and the vision spread, farther and wider than any of us ever imagined.

Mike has now made it his mission to help foster its growth and he is currently on airplanes every week to do just that. So if Freespace or Mike comes to your city or town, please stop by and say ‘hi’.

Right before Burning Man this year the Huffington Post reached out to me to do a live interview about the event, specifically about its shared economy. Neither the producer nor the host had been there so naturally they had a lot of misconceptions about what actually goes on. From what they had heard, the shared economy is the emphasis of the event.

The other two guests and I were able to shift the focus onto the event itself and less about how it’s “shared economy is changing the world”. At the end of the day, Burning Man is many different things to many different people. The shared economy of Burning Man is only one of the 10 principles that makes this event so special. Everyone takes something different home from the event and its just something you have to experience.

Watch a recording of the interview on Huffington Post.

A few months back my friends Kyle and Nick joined me in an endeavor to build a chicken coop for our friends at the Avolon Hot Springs. Avalon is an amazing place high above Middletown, CA in the mountains of Lake County. It dates back to the 1850′s when it was called Howard Hot Springs and catered to the rich and famous Victorians.

Today it still retains a lot of its history with original springs still on the property, rustic cabins, and a beautiful main hall. We were happy to help out and add to the charm on Avalon. Also, fresh eggs when we visit are a plus.

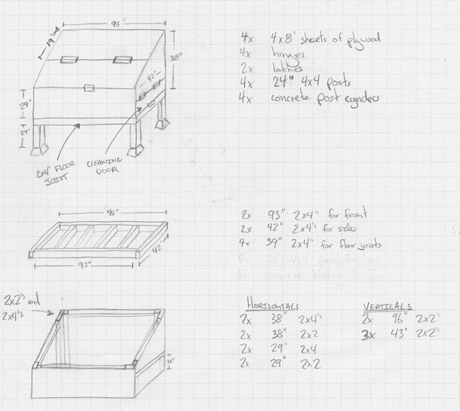

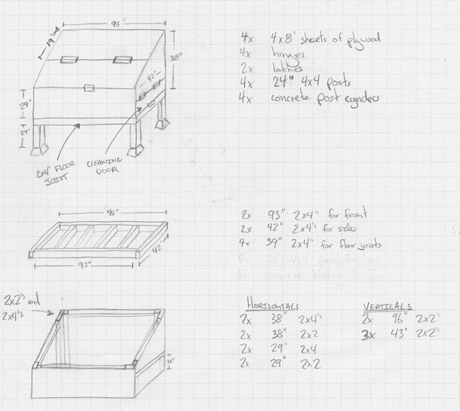

Below are some of the photos and plans. We precut and built most of it in my basement and then transported it up there. Even with that pre-planning it still took us a day and a half to assemble and paint it.

(We added a door for cleaning and a thick plastic floor for easy cleaning)

(We used organic recycled materials for the insulation)

(The plans I drew up. Click the photo for a larger image)

All in all it was a very fun and rewarding project. It’s also quite simple and the average DIY’er should have no problem tackling it. It was all of our first times too! For more photos, check out the chicken coop build photo set on Flickr.

A few months back my friend @nick showed up at my door with an idea and a cardboard box full of wood. He and his coworkers from Twitter were building a kiosk that would take photos and tweet them from @twisitor. Naturally, I thought the project was hilarious so we headed down to the garage.

The wood he brought was a nice veneered bamboo with a bull-nosed edge on one side which gave it a nice finished look. The shingles were cut by @nick and his cohorts from cheap shims they got from the hardware store. The nailgun made quick work of the project and it turned out great! Everyone liked it so much that it ended up in the lobby. Thanks @nick for a fun and random project!